In a recent video about the iPhone 16 Pro, specifically its camera specifications and features, photographer Tony Northrup of the popular YouTube channel Tony & Chelsea Northrup called the iPhone 16 Pro camera “disappointing and misleading,” opening a complex can of worms concerning megapixels, resolution, image sensor designs, and image quality at large.

At the core of Northrup’s video is the claim that Apple’s 48-megapixel cameras don’t really take 48-megapixel photos.

“The 48-megapixel resolution is absolutely fake,” Northrup says while alleging that the iPhone 16 Pro delivers “around six megapixels of actual detail in ideal circumstances.”

Although some of Northrup’s conclusions, per industry experts, go way too far and are misleading, he touches on some interesting things mobile photographers should consider.

Megapixels Don’t Tell the Entire Story

Calling the iPhone’s 48 megapixels “fake megapixels” doesn’t make much sense. A megapixel is a simple, objective measurement. If a 48-megapixel image has 48 million pixels, it’s a 48-megapixel image.
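To make that arithmetic concrete, here is a minimal sketch of how a megapixel figure falls out of an image's dimensions. The 8064 x 6048 and 4032 x 3024 dimensions are assumptions used for illustration and are not figures from this article.

```python
def megapixels(width: int, height: int) -> float:
    """Return the pixel count of a width x height image, in millions."""
    return width * height / 1_000_000


# Assumed dimensions for illustration only (not stated in the article).
full_res = megapixels(8064, 6048)
quarter_res = megapixels(4032, 3024)

print(f"{full_res:.1f} MP, {quarter_res:.1f} MP")  # prints "48.8 MP, 12.2 MP"
```

The number only counts pixels. It says nothing about how much detail each of those pixels holds.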

However, saying — and demonstrating — that a 48-megapixel image from an iPhone or any smartphone doesn’t look as sharp as a 48-megapixel image from a full-frame interchangeable lens camera is accurate. This is not an all-else-equal situation. Fundamentally different things happen with a smartphone versus a full-frame camera that impact how good an image looks.

Quad Bayer Sensors Are Common in Smartphones

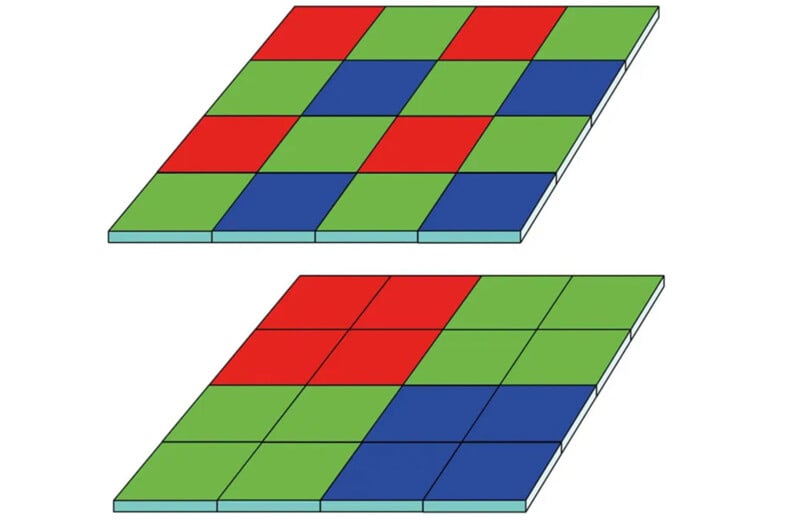

One of the most significant differences is the sensor technology itself. Since a smartphone sensor is much smaller than a Micro Four Thirds, APS-C, or full-frame image sensor, many of the latest smartphones, including the iPhone 16 Pro, use what’s called a Quad Bayer image sensor.

Before diving into this technology, it’s worth briefly describing how an image sensor “sees” color. Without a color filter array, a camera would only see in black and white. On its own, a sensor can only measure light intensity, from none (black) to complete pixel saturation (white). An image sensor requires a color filter array to take color photos.

On a typical dedicated camera, these are Bayer filters, a mosaic of red, green, and blue filters. Each pixel receives light through just one of these three primary colors.

With a Quad Bayer filter, each color on the filter array has not one but four pixels behind it. In effect, each group of four same-color pixels is combined into one. As mentioned in PetaPixel’s iPhone 16 Pro Review, the iPhone’s 48-megapixel Quad Bayer sensor delivers visible resolution similar to a 12-megapixel sensor.
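The difference between the two layouts, and why combining four pixels into one quarters the pixel count, can be sketched in a few lines. This is a simplified illustration, not Apple's actual pipeline: the RGGB ordering, the averaging step (real sensors may sum charge instead), and the sample values are all assumptions.

```python
# One repeating tile of a standard Bayer mosaic (RGGB order is an assumption).
BAYER_TILE = [["R", "G"], ["G", "B"]]


def bayer_color(row: int, col: int) -> str:
    """Color filter over a pixel in a standard Bayer mosaic."""
    return BAYER_TILE[row % 2][col % 2]


def quad_bayer_color(row: int, col: int) -> str:
    """Color filter over a pixel in a Quad Bayer mosaic.

    Each Bayer color is stretched over a 2x2 block of pixels.
    """
    return bayer_color(row // 2, col // 2)


def bin_2x2(raw: list[list[float]]) -> list[list[float]]:
    """Average each 2x2 block of same-color pixels into a single value."""
    binned = []
    for r in range(0, len(raw), 2):
        binned_row = []
        for c in range(0, len(raw[0]), 2):
            total = raw[r][c] + raw[r][c + 1] + raw[r + 1][c] + raw[r + 1][c + 1]
            binned_row.append(total / 4)
        binned.append(binned_row)
    return binned


# A 4x4 corner of a Quad Bayer sensor: four pixels behind each color.
pattern = ["".join(quad_bayer_color(r, c) for c in range(4)) for r in range(4)]
print(pattern)  # ['RRGG', 'RRGG', 'GGBB', 'GGBB']

# Made-up raw readings for the same 4x4 corner.
raw = [
    [10, 12, 20, 22],
    [14, 16, 24, 26],
    [30, 32, 40, 42],
    [34, 36, 44, 46],
]
print(bin_2x2(raw))  # [[13.0, 23.0], [33.0, 43.0]]
```

After binning, the 4 x 4 grid becomes a 2 x 2 grid laid out like an ordinary Bayer mosaic, which is the same one-quarter relationship as going from 48 megapixels to 12.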

An Expert’s Take

PetaPixel spoke with Sebastiaan de With, an expert photographer and the co-founder of Lux, the makers of iPhone camera apps like Halide and Kino. De With has watched Northrup’s video and is actively writing his iPhone 16 Pro Review, which, if it’s anything like his iPhone 15 Pro Max Review, will feature a lot of great information, analysis, and awesome iPhone photos.

“iPhones went to 48 megapixels with iPhone 14 Pro — with most 48MP output being limited to ProRAW at first. It’s of course never quite useful to compare an iPhone — or any smartphone! — to a full frame camera, because physics dictates your image output. You can’t defeat physics when it comes to gathering light and getting a certain kind of rendering. That’s why phones tend to compensate in the area they are far superior in: computation. Computational photography has enabled stuff your full-frame camera can’t even dream of, like 10-second handheld nighttime exposures with a sensor the size of your pinkie toe nail or smaller,” the developer and photographer tells PetaPixel.

“I think as photographers, we enjoy comparing specs and outputs, but we’ve always known that megapixels are not a reliable indicator of camera quality. It’s not even an indicator of resolution — resolving detail comes down to the lens and camera attributes and parameters!”

De With argues that if photographers want to measure Apple’s 48-megapixel claims, it’s vital to consider what these claims amount to in terms of real-world improvements.

“Do users get higher resolution photos with more detail? Undoubtedly. Moving further, can you say that it truly offers four times the detail of the 12-megapixel sensors that precede it?”

“While it’s a Quad Bayer arrangement that optimizes for 12-megapixel (and now 24-megapixel) output, which makes fantastic sense for 99% of iPhone users’ photos and videos, I would go on a limb and say it is.”

“Why? Because almost every pro photographer I have seen use it has been impressed with the output of the virtual ‘2×’ lens that is included, which is using a center portion crop of the 48MP sensor and some smart processing to give you a sorta-arguably-optical extra lens.”
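The geometry behind that center crop is simple to work out. A 2x crop keeps half the width and half the height of the frame, which leaves one quarter of the pixels. The sketch below assumes an 8064 x 6048 full-resolution frame, a figure not taken from this article, and the helper name is hypothetical. It covers only the crop, not the “smart processing” de With mentions.

```python
def center_crop_box(width: int, height: int, zoom: float) -> tuple[int, int, int, int]:
    """Return (left, top, crop_width, crop_height) for a centered crop.

    A zoom of 2 keeps the middle half of the frame in each dimension.
    """
    crop_w = round(width / zoom)
    crop_h = round(height / zoom)
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, crop_w, crop_h


# Assumed full-resolution frame size, for illustration only.
left, top, crop_w, crop_h = center_crop_box(8064, 6048, zoom=2)
crop_megapixels = crop_w * crop_h / 1_000_000

print(crop_w, crop_h)            # 4032 3024
print(f"{crop_megapixels:.1f}")  # 12.2
```

Under those assumptions, the virtual 2x lens starts with roughly 12 megapixels of sensor area, the same pixel count as the dedicated 12-megapixel telephoto cameras it is compared against.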

De With admits that these images aren’t quite as detailed as other full-sensor outputs, which he’ll discuss in his iPhone 16 Pro Review next week, but he argues the virtual 2x lens goes “toe-to-toe with previous 2x telephoto outputs on iPhones in many cases, which were 12-megapixel sensors with actual lenses.”

Ultimately, claiming that the iPhone 15 and now 16’s higher resolution is “misleading” or only real on paper is “dubious,” per de With.

“People use [the 48-megapixel iPhone camera] every day and get higher resolution shots with more detail. Whether it’s the same resolving power as a full-frame camera? Probably not. But that’s comparing an Apple to an orange. Or Sony.”

More Megapixels Doesn’t Necessarily Mean Better Image Quality

Northrup’s video includes some great points, but suggesting Apple is misleading customers is a bridge too far.

That said, the video touches on something important, and it is a drum worth beating until everyone, including manufacturers themselves, gets on the same page: Megapixels are not a suitable stand-in for image quality because not all pixels are made equal. Only in a vacuum do megapixels matter all that much, and we don’t take photos inside a vacuum.

“We the consumers tend to think that more megapixels means more image quality. That’s what we really want, right?” Northrup says.

“But it is not true, especially with the tricks Apple and other smartphone manufacturers are pulling. Apple is producing images with six megapixels of detail packed into a 48-megapixel file,” Northrup continues. “They’re making a huge file with absolutely no image quality benefit to it.”

And therein lies the rub. Megapixels don’t describe image quality. They describe how many pixels an image contains and say nothing of the quality of those pixels.

Frankly, getting bogged down in a discussion on megapixels and whether Apple is lying to people misses the much more critical point: iPhone camera technology, regardless of how Apple describes it (mostly accurately, in my view), has significantly improved in recent years. Focusing on how an iPhone’s 48-megapixel photo isn’t as good as a full-frame camera with a similar megapixel count completely ignores how much better the iPhone 16 Pro is than older smartphones and many competing smartphones when it comes to photography and video.

Obviously, a full-frame camera with a dedicated lens with large, specially designed optics produces better-quality images than a smartphone camera. And just as obviously, I’d have thought, just because a smartphone creates a 48-megapixel image doesn’t mean it will compete favorably against a 48-megapixel full-frame camera. Sensor size, and thus pixel size, dramatically impacts image quality. As Chris Niccolls has said a few times, there’s no replacement for displacement. There’s also no perfect substitute for high-end glass.

But how we get from there to essentially, “Apple lies and the iPhone is a six-megapixel camera,” I don’t know. It makes little sense to compare image quality from two sensors that are so different. Comparing the iPhone 16 Pro — or iPhone 15 Pro, in Northrup’s case — to a full-frame Sony camera instead of other smartphones is pointless unless the objective is to show that a full-frame camera can take higher-quality images than a smartphone in many situations. Of course it can.

Painting Apple as deceptive because of that simple fact is playing with fire and, as some of the responses to Northrup’s video show, leads people to odd conclusions. Some suggest Apple has inflated the megapixel count to increase file size and sell more iCloud storage, despite the fact that Apple has just embraced JPEG XL compression to reduce file size. Others suggest that Apple is outright tricking people and should be investigated.

Perhaps Apple opened itself up to these sorts of flawed comparisons, though. After all, Apple routinely touts the photographic capabilities of its iPhone models, showing how the devices can take beautiful, amazing photos. Oh, wait, they can take awesome images. That part of Apple’s marketing is bang on.

Image credits: Featured image courtesy of Apple