
A week after Meta CEO Mark Zuckerberg was grilled during a Senate hearing on child safety, the company announced new protections for teens navigating sextortion scams.

Meta will bolster its involvement in the Take It Down program, created by the National Center for Missing & Exploited Children (NCMEC), by expanding support from just English and Spanish to 25 languages. The program lets users generate a unique hash of an explicit or intimate image taken of a minor. The hash, not the actual content, is then sent to NCMEC, which helps participating companies like Meta remove the offending content. The program is open to minors worried about images or videos that have been or could be posted online, to a parent or trusted adult acting on a young person’s behalf, and to adults concerned about content taken of them when they were underage.
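The hash-only flow described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Take It Down's actual implementation: the article does not say which hashing algorithm or submission format the program uses, so SHA-256 stands in for the fingerprint, and the function names `fingerprint_file` and `build_report` and the payload fields are hypothetical.

```python
import hashlib


def fingerprint_file(path, chunk_size=65536):
    """Return a SHA-256 hex digest of the file's bytes, read in chunks.

    Assumption: SHA-256 is a stand-in; the program's real algorithm
    is not specified in the article.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read fixed-size chunks so large videos never sit fully in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_report(path):
    """Build a hypothetical report payload.

    Only the fingerprint is included; the image bytes stay on the device.
    """
    return {"hash_algorithm": "sha256", "hash": fingerprint_file(path)}
```

The point of the design is that the report contains a fixed-length fingerprint rather than the image, so the file itself never has to be uploaded. A cryptographic hash like the one used here only matches byte-for-byte identical copies; whether the real program also matches altered copies is not covered in the article.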

“Having a personal intimate image shared with others can be devastating, especially for young people. It can feel even worse when someone threatens to share it if you don’t give them more photos, sexual contact or money — a crime known as sextortion,” Meta explains in its release.

The language and timing, so soon after the Senate hearing in which sextortion scams came up multiple times, feel like Meta’s reassurance that it is taking the issue seriously.

Of course, that reassurance might have been more effective had the language not been lifted almost word for word from the release announcing the rollout of the Take It Down program last year.

“Having a personal intimate image shared with others can be scary and overwhelming, especially for young people. It can feel even worse when someone tries to use those images as a threat for additional images, sexual contact or money — a crime known as sextortion,” Meta said in its February 2023 release.

Meta also said it partnered with the nonprofit Thorn, which focuses on technological solutions to defend against child sexual abuse, to “develop updated guidance for teens on how to take back control if someone is sextorting them.”

“It also includes advice for parents and teachers on how to support their teens or students if they’re affected by these scams,” the release adds.

These resources can be found under Meta’s “Sextortion Hub” in the “Safety Center.”

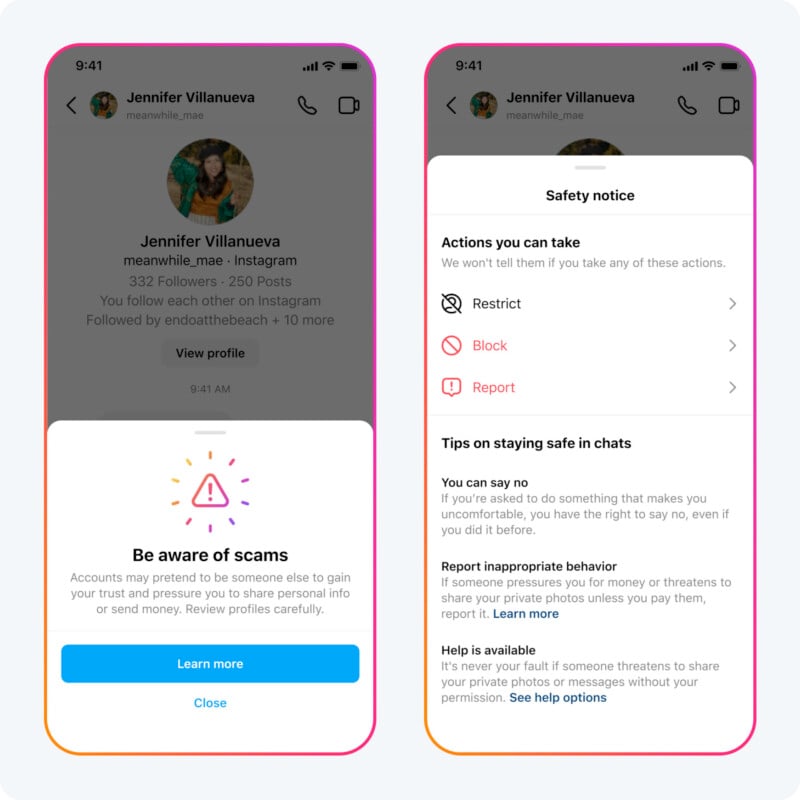

Additionally, Meta will show “Safety Notices” when a user messages an account that “has shown potentially scammy or suspicious behavior,” according to the release. The notice gives the user access to more resources and lets them restrict, block, or report the potentially malicious account, or bring up more information.

Image credits: Header photo licensed via Depositphotos.