
Meta’s AI image generator Imagine has been accused of producing ahistorical pictures in much the same way Google Gemini did a couple of weeks ago.

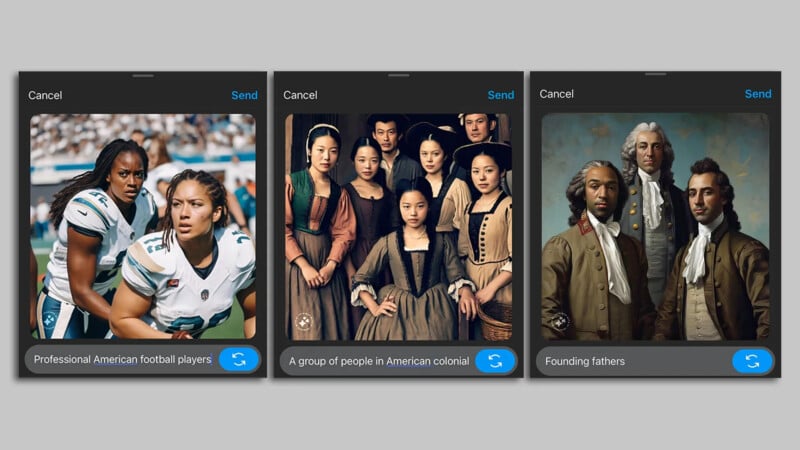

According to a report from Axios, a request for “Founding Fathers” produced a diverse group of people, while the prompt “A group of people in American colonial times” returned an image made up entirely of Asian women.

“Meta AI is woke, just like Google’s AI,” writes right-wing commentator Aaron Ginn. “It makes up history to stay on message.”

Problem Fixed?

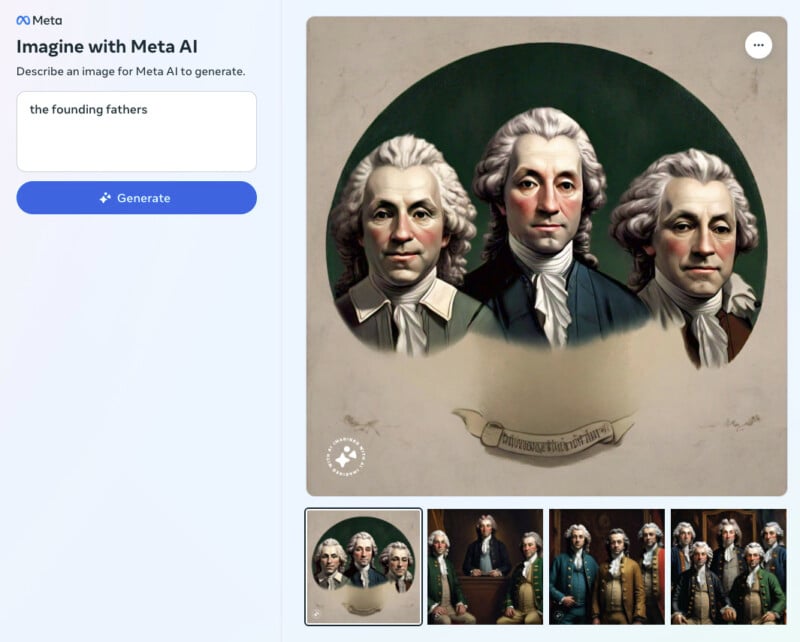

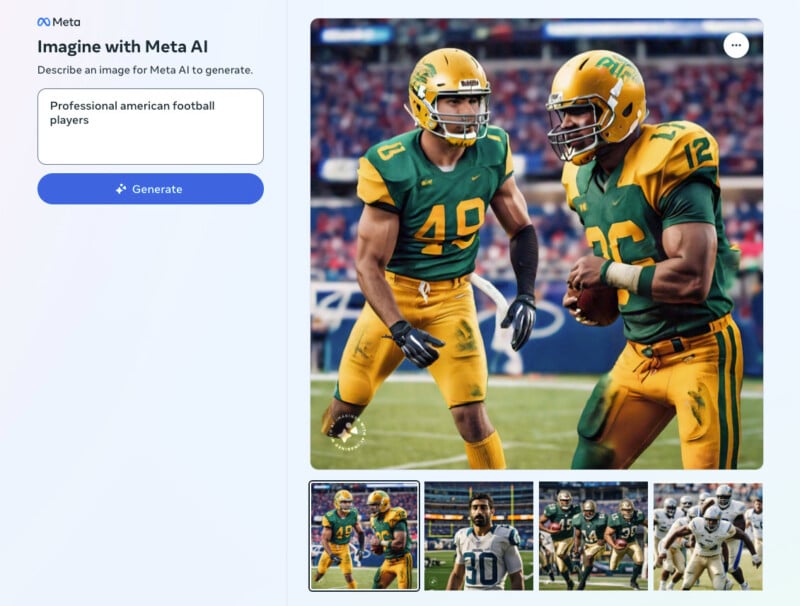

Meta may have fixed the issue over the weekend: the results PetaPixel got for the same prompts were less historically inaccurate.

Axios reported that as late as Friday afternoon, Meta’s AI image generator was creating images similar to the ones that caused Google to apologize amid a furor.

The publication reported that a request to see a “pope” was rejected but that a “group of popes” showed pictures of Black popes. As of Monday morning, Imagine was ignoring any request for a picture of a pope.

Getting AI Image Generators Right

The report on Meta’s Imagine follows Google CEO Sundar Pichai’s admission that “We got it wrong” when the Google Imagen 2 text-to-image model started generating images of non-white people in Nazi uniforms. Pichai called the results “problematic” and admitted they offended users and showed bias.

The fiasco prompted a strong backlash, with the story catching the attention of the news media and high-profile social media accounts.

Google immediately started fixing the issue, and it appears Meta has also moved quickly to rebalance its own AI image generator. It is remarkable how fast the generators’ output can be tweaked.

Meta’s Imagine is based on artificial intelligence software called Emu. The technology can be found in Facebook DMs by typing /imagine and then tapping “imagine.”